What to Know About Kubernetes Ingress

This blog post is the second in a four-part series that aims to thoroughly explain how Kubernetes Ingress works:

- Kubernetes Networking and Services 101

- Ingress 101: What is Kubernetes Ingress? Why does it exist? (This article)

- Ingress 102: Kubernetes Ingress Implementation Options

- Ingress 103: Productionalizing Kubernetes Ingress (coming soon)

Kubernetes Ingress is an advanced topic that can’t truly be understood without first understanding the many fundamentals it builds upon. For that reason, if you haven’t already, I highly recommend reading the Kubernetes Networking and Services 101 post, which includes a refresher on several of those fundamentals.

What is Kubernetes Ingress? The short answer

Ingress means to enter. Kubernetes Ingress refers to external traffic being routed to a Kubernetes Ingress Controller, which is really just a Layer 7 load balancer that runs inside the cluster. The Ingress Controller dynamically configures itself based on Ingress YAML objects, which are snippets of configuration that declaratively describe the desired Layer 7 routing.

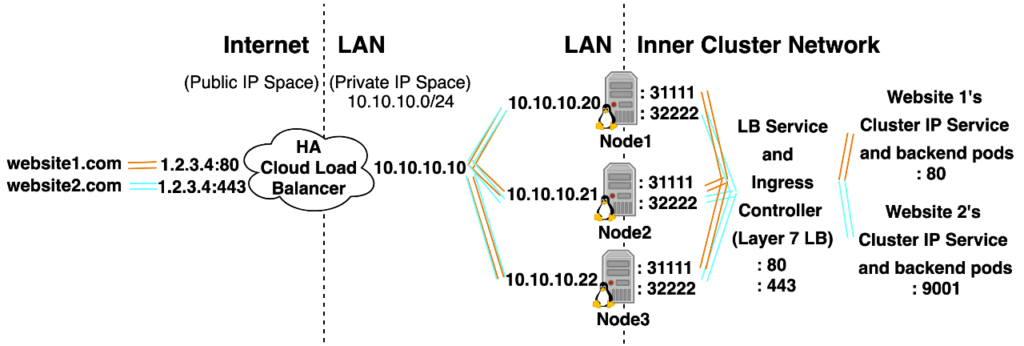

How Traffic Flows Into a Kubernetes Cluster using an Ingress Controller and Ingress YAML Object

What’s not shown in the diagram above is that the cluster’s etcd datastore will hold an Ingress YAML object and a Kubernetes TLS Secret for each of websites 1 and 2. The Ingress Controller’s Deployment YAML specification will also reference a Kubernetes ServiceAccount with RBAC permissions that allow the spawned L7 LB pods to read those TLS Secrets and Ingress objects through the kube-apiserver (which persists them in etcd).

Each Ingress object references a Kubernetes TLS Secret and declares where traffic should go: traffic for https://website1.com:443 should be directed to port 80 of Website 1’s ClusterIP Service, and traffic for https://website2.com:443 should be directed to port 9001 of Website 2’s ClusterIP Service. The Ingress Controller is a Layer 7 load balancer that dynamically configures itself based on these Ingress objects and applies them to the traffic it receives from the Cloud LB. Because it operates at Layer 7, it knows how to terminate HTTPS, can tell each website’s traffic apart since it understands hostnames and URL paths, and knows which backend to forward the traffic to.
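As a rough illustration, Website 1’s Ingress object might look something like the sketch below. It assumes the current `networking.k8s.io/v1` Ingress API, an IngressClass named `nginx`, a TLS Secret named `website1-tls`, and a ClusterIP Service named `website1`; all of these names are hypothetical. Website 2’s object would look the same apart from its host, Secret name, Service name, and a port number of 9001.

```yaml
# Hypothetical Ingress object for "Website 1" (all names are illustrative).
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: website1
spec:
  ingressClassName: nginx        # assumes an IngressClass named "nginx" exists
  tls:
    - hosts:
        - website1.com
      secretName: website1-tls   # Kubernetes TLS Secret holding the cert and key
  rules:
    - host: website1.com         # matches requests whose Host header is website1.com
      http:
        paths:
          - path: /
            pathType: Prefix     # every path under / goes to the same backend
            backend:
              service:
                name: website1   # Website 1's ClusterIP Service
                port:
                  number: 80
```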

Why do Ingress Controllers Exist? What problems do they solve?

The first post in this series showed that a Service of type LoadBalancer can be used to let traffic from the internet reach a service inside the cluster. Technically, you could use Services of type LoadBalancer 100% of the time and never use an Ingress Controller at all. So what are the advantages of using an Ingress Controller? What problems does it solve?

- Ingress cuts down on infrastructure costs: If you wanted to expose 30 services to the public internet, using Services of type LoadBalancer would create 30 cloud load balancers outside your cluster, and you’d pay for each one. With an Ingress Controller, you only need one Service of type LoadBalancer, to expose the Ingress Controller itself, so you only pay for a single load balancer.

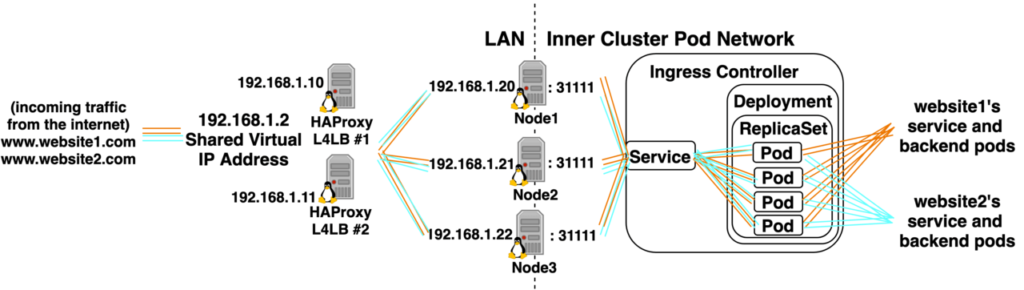

- Scalable load balancer architecture: Layer 7 (HTTP/application) LBs offer more features than Layer 4 (port/network) LBs, but an L7 LB can’t handle nearly as much traffic as an L4 LB. A common load balancer architecture emerged to combine the features of L7 LBs with the scale of L4 LBs: start with an HA pair of L4 LBs and use them to load balance across multiple L7 LBs. (Let’s take a more detailed look at one of our Ingress Controller diagrams.)

The pods inside the ReplicaSet are L7 load balancers that are dynamically configured based on Ingress objects. A pair of HA L4 LBs outside the cluster load balances traffic across 4 replicas of an L7 load balancer inside the cluster.

- Ingress objects allow load balancer configuration to be managed in a distributed fashion, which eases configuration management in a few ways:

- First, you manage many small files instead of one massive loadbalancer.config file that ten different people want to experiment with at the same time.

- Second, it offers a clean way to separate stable load balancer configuration from experimental configuration: stable Ingress objects end up in the git master branch, while experimental ones live on feature branches.

- Third, Ingress objects enable self-service workflows. Once the ops team sets up an environment with appropriate guardrails, developers can update load balancers themselves, without relying on ops, simply by deploying a new Ingress object.

- Ingress Controllers are software-defined load balancers that configure themselves based on the standard Ingress API. That standard introduces consistency, which offers many benefits:

- Portable load balancer logic, which offers consistent load balancer functionality and application experience regardless of where and how you host your Kubernetes cluster. (If you limit your hosting provider to simple L4 routing, which every provider offers and which isn’t hard to do in bare-metal setups, and implement advanced L7 functionality and routing logic in software that runs in your cluster, you get very portable configurations that behave the same regardless of where they’re hosted.)

- For the first time, completely different families of load balancers (Nginx, HAProxy, Traefik, Envoy, and options that don’t even exist yet) gain some level of reusable configuration for basic functionality. Basic configuration becomes easier to write, templatize, and automate, and you don’t need specialized knowledge to kick the tires and test the basic functionality of a different flavor of Ingress Controller.

- Kubernetes TLS Secrets standardize on the PEM format for HTTPS certificates. Google “tls certificate file format” and you’ll quickly see why this alone is so valuable. (TL;DR: Kubernetes standardized HTTPS certificates into one format, as opposed to the 15+ different formats they can exist in.)
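The cost argument in the first bullet above boils down to a single Service of type LoadBalancer sitting in front of the Ingress Controller pods. A minimal sketch follows; the Service name, the `ingress-nginx` namespace, and the `app.kubernetes.io/name: ingress-nginx` selector label are assumptions and must match whatever labels your controller’s pods actually carry. Every other application can stay behind a plain ClusterIP Service and be reached through Ingress rules.

```yaml
# Minimal sketch: one cloud load balancer in front of the Ingress Controller pods.
# Name, namespace, and selector label are hypothetical.
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  type: LoadBalancer             # asks the cloud provider for a single external LB
  selector:
    app.kubernetes.io/name: ingress-nginx   # must match the controller pods' labels
  ports:
    # By default Kubernetes also allocates a NodePort for each port below;
    # the cloud LB forwards to those NodePorts on every node.
    - name: http
      port: 80
      targetPort: 80
      protocol: TCP
    - name: https
      port: 443
      targetPort: 443
      protocol: TCP
```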

How do I get Kubernetes Ingress Working?

Ingress YAML objects are just snippets of load balancer configuration; they don’t do anything by themselves. If you want your Ingress rules to stop being ignored and work as intended, you’ll need to deploy an Ingress Controller (a self-configuring L7 LB) into your cluster. This controller will also use a Service of type LoadBalancer to spawn an HA Cloud LB outside the cluster and automagically configure it to forward traffic to the Ingress Controller. Unfortunately, a few pieces of configuration can’t (easily) happen automagically:

- For starters, you need a way to direct HTTP(S) traffic to the HA Cloud LB. This usually involves configuring DNS so that the hostnames defined in your Ingress YAMLs resolve to the HA Cloud LB.

- Configuring HTTPS/TLS Certificates.

- There’s also the fact that Ingress Controllers are usually exposed through a Kubernetes Service of type LoadBalancer, which doesn’t work on every Kubernetes implementation and behaves differently depending on which cloud provider is hosting Kubernetes.
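For the HTTPS/TLS piece, the certificate ends up in a Kubernetes TLS Secret that an Ingress object names in its `tls` section. A minimal sketch of such a Secret is below; the name `website1-tls` is hypothetical, and the placeholder values must be replaced with your base64-encoded PEM certificate and private key before applying. In practice, `kubectl create secret tls website1-tls --cert=tls.crt --key=tls.key` builds the same kind of object from PEM files and handles the base64 encoding for you.

```yaml
# Hypothetical TLS Secret referenced by an Ingress's spec.tls[].secretName.
apiVersion: v1
kind: Secret
metadata:
  name: website1-tls
type: kubernetes.io/tls          # built-in Secret type; requires tls.crt and tls.key
data:
  tls.crt: <base64-encoded PEM certificate>   # placeholder: replace before applying
  tls.key: <base64-encoded PEM private key>   # placeholder: replace before applying
```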

Luckily, there’s a site with templates that make navigating the configuration options a little easier: https://kubernetes.github.io/ingress-nginx/deploy. It splits the commands to deploy an Nginx Ingress Controller into two parts:

- The “Cloud Provider Specific Command” (a Service of type LoadBalancer or NodePort that depends on the environment you’re deploying to). Here are the commands to provision the AWS load balancer Service (the “-l7” variant, per the file names):

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/provider/aws/service-l7.yaml

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/provider/aws/patch-configmap-l7.yaml

- The “Mandatory Command” required for all deployments (which deploys the actual Nginx Ingress Controller itself):

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/mandatory.yaml

Let’s go a bit deeper into what an Ingress Controller is and what’s in these YAML files:

- A Namespace: For all Ingress related yaml objects to exist in.

- An LB Service, which:

- Uses Cloud Provider APIs to provision a HA Cloud LB existing outside the cluster.

- Automagically configures the external LB to forward to the Dynamic IP of every worker node in your cluster regardless of scale up/scale down.

- Automagically configures the external LB to forward to the NodePorts that kube-proxy maps to ports 80 and 443 of the Ingress Controller Kubernetes Service existing in your cluster.

- ConfigMaps: Containing sensible default configurations.

- Two Deployments:

- Replicated Pods that make up the Ingress Controller / Self Configuring Layer 7 LB.

- And a default-backend deployment which acts as a 404 not found page for traffic that gets sent to the Ingress Controller without a matching rule.

- A ServiceAccount, ClusterRole, Role, RoleBinding, and ClusterRoleBinding: An Ingress Controller is a pod running software that needs RBAC rights to read the Ingress and Kubernetes TLS Secret objects in the cluster so it can configure itself, and RBAC rights have to be assigned to an identity. For a human Kubernetes admin with cluster-admin rights, those rights are assigned to the identity in a certificate embedded in the ~/.kube/config file on the admin’s computer. For a pod running in the cluster, we attach rights to a ServiceAccount and attach the ServiceAccount to the pods that make up the Ingress Controller. This way, the Ingress Controller pods have API rights to read Ingress and Secret objects in your cluster, so they can do their job of configuring themselves.
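A stripped-down sketch of that identity wiring is below. The names (`ingress-controller`, `ingress-controller-read`, and the `ingress-nginx` namespace) are hypothetical, and real Ingress Controller manifests grant noticeably more than this (for example, rights to update Ingress status and to read ConfigMaps), so treat it as an illustration of the pattern rather than a drop-in replacement. The controller’s Deployment would then set `serviceAccountName: ingress-controller` in its pod template.

```yaml
# Identity the Ingress Controller pods run as (hypothetical names).
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ingress-controller
  namespace: ingress-nginx
---
# Read-only rights on the objects the controller configures itself from.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: ingress-controller-read
rules:
  - apiGroups: ["networking.k8s.io"]
    resources: ["ingresses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]               # core API group
    resources: ["secrets", "services", "endpoints"]
    verbs: ["get", "list", "watch"]
---
# Grants the ClusterRole to the ServiceAccount across all namespaces.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: ingress-controller-read
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-controller-read
subjects:
  - kind: ServiceAccount
    name: ingress-controller
    namespace: ingress-nginx
```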

Tips for Troubleshooting Ingress

Let’s say you’re new to Kubernetes and want some hands-on experience with Kubernetes and Ingress. AWS, Azure, and GCP each make it easy to deploy a Kubernetes cluster, and if you don’t already have access through an employer, Azure and GCP offer solid free trial subscriptions. Once you have kubectl access to a cloud-hosted Kubernetes cluster, you can use the following commands to deploy a web server in your cluster, an Ingress rule that describes it, and an Ingress Controller to route traffic to it.

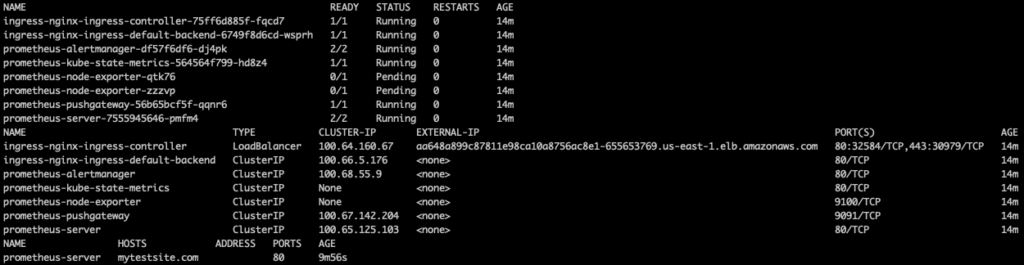

mkdir ~/temp; cd ~/temp; mkdir yamls

kubectl create namespace test

kubectl config set-context --current --namespace=test

helm fetch --untar stable/prometheus

helm fetch --untar stable/nginx-ingress

helm template prometheus --name prometheus --set server.ingress.enabled=true,server.ingress.hosts[0]=mytestsite.com --output-dir ./yamls

helm template nginx-ingress --name ingress --output-dir ./yamls

kubectl apply -f yamls/nginx-ingress/templates/

kubectl apply -f yamls/prometheus/templates/ --validate=false

kubectl get pod; kubectl get service; kubectl get ingress

T-Shoot Tip #1: Use kubectl port-forward to bypass the Ingress Controller entirely. kubectl port-forward uses your kubectl credentials to create an encrypted TLS tunnel to the kube-apiserver, and socat to set up a temporary bidirectional traffic-routing process between you, the kube-apiserver, and a pod or service in the cluster.

kubectl port-forward service/prometheus-server 9001:80

Chrome: http://localhost:9001

You’ll see the website running in your cluster! And although you’re connecting over unencrypted HTTP, your traffic is being routed through an encrypted, temporary tunnel.

T-Shoot Tip #2: You can test that a website exposed over Ingress works even if you don’t have rights to configure DNS. Maybe you don’t own a domain name, you don’t have rights to point a test.mycompany.com subdomain at your Ingress Controller’s LB, you can’t edit your company’s LAN DNS, and you don’t want to start a slow change-control process just for a simple test. The good news is that’s what hosts files are for. kubectl get service shows the EXTERNAL-IP of the provisioned load balancer. AWS load balancers are exposed as DNS names rather than fixed public IPs, but you can find a public IP belonging to the load balancer by pinging it, then use that IP to create new entries in your /etc/hosts file, which is a locally significant DNS override.

ping aa648a899c87811e98ca10a8756ac8e1-655653769.us-east-1.elb.amazonaws.com

PING aa648a899c87811e98ca10a8756ac8e1-655653769.us-east-1.elb.amazonaws.com (54.86.36.163): 56 data bytes

sudo nano /etc/hosts

54.86.36.163 mytestsite.com

54.86.36.163 mylb.com

You can use:

head /etc/hosts

ping mytestsite.com

ping mylb.com

…to verify that the locally significant DNS override is working. Now when you enter:

Chrome: http://mytestsite.com

You’ll see your website! Just be aware that this time your traffic is unencrypted. And when you enter:

Chrome: http://mylb.com

You’ll see “default backend - 404”. This message comes from the Ingress Controller’s default-backend deployment running in your cluster.

T-Shoot Tip #3: Query Google’s DNS servers to check whether a public internet DNS update went through, without waiting for the update to propagate to your company’s or ISP’s DNS server. Let’s say you update a public DNS entry with a time to live of 1 minute, but 2 minutes have gone by and ping mynewsite.com still isn’t resolving. You think the update just needs more time to propagate to more public DNS servers, but you also don’t want to wait a day only to find out you made the update incorrectly. Google’s DNS servers are usually among the first to update, so a good command to use is:

nslookup mynewsite.com 8.8.8.8

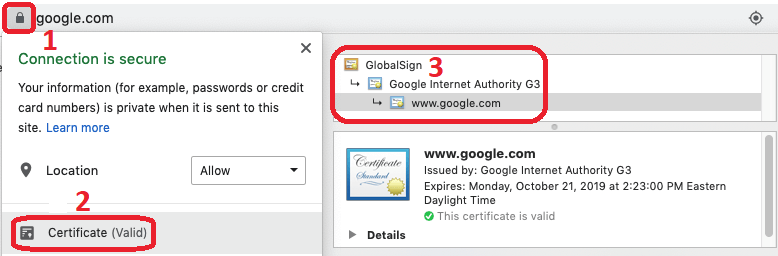

T-Shoot Tip #4: Click the HTTPS lock in your browser to get information that helps when troubleshooting HTTPS configurations. If you configure HTTPS and it’s not working, the first thing to try is explicitly typing https:// before your website’s address. Many production-grade websites automatically redirect HTTP to HTTPS, but your test site or Ingress Controller may not do this without some additional configuration. If that doesn’t help, there’s probably an issue with the certificate: you may have created a Kubernetes TLS Secret by hand and made a mistake, or your certificate may be valid for a domain name, just not the one you’re using. Either way, clicking the lock to the left of the domain name can sometimes give you enough detail to resolve the issue.

Conclusion

Oteemo thanks you for reading this post! If you want to find out more about DoD Iron Bank services, DevSecOps adoption, or Cloud Native Enablement, contact us by submitting the form on our website.

We will be happy to answer all your questions, especially the hard ones!
