We hear a lot about cloud-native and containerized solutions these days, but does that mean you should jump on the newest technology bandwagon? That depends.

We live in an amazing time. Application teams building the next great product can choose from a variety of excellent deployment solutions, many of which support modern Continuous Integration and Continuous Deployment (CI/CD), both on-premises and in the cloud. Many of those solutions are not containerized, yet they provide great performance, observability, reliability, and scalability. So why would you choose a containerized solution in the first place?

Why Containerize?

Containerized Solutions provide interfaces and build processes for packaging your application and its build instructions into a single unit called a container image, which then runs as a Container. Whether you are building an application in Go, Node, Python, Ruby, Java, F#, or any other programming language, the general format for compiling and packaging the build is the same. Even more powerful, you can run all of those different applications and workloads, built in different languages, side by side on the same hardware. There is no need to spin up, configure, and maintain separate servers for each application system.

Containers are also not limited to running on one server each. Many can share the same set of servers, or can run on a serverless infrastructure, which shares a collection of servers behind the scenes. They can be configured to dynamically scale horizontally and run on as many “servers” behind the scenes as are needed to get the job done. Everything from hardware to operating systems is abstracted out and made available in a clever and standardized way.

So why not move to a Containerized Solution?

Why Not Containerize?

While you can move almost any workload to a Containerized Solution, that doesn’t mean it will be scalable. Your application may need some rework before it matches the typical scaling patterns for a container. For instance, a healthy, scalable containerized application is usually one that can be destroyed and replaced when it becomes unhealthy, based on configured metrics, and one where load-balanced containers for the same application can be added or removed as demand and load change. Some applications are not designed to be load-balanced or to have multiple instances running at the same time. Likewise, not all applications take kindly to being shut down or restarted.
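As a rough illustration of what "taking kindly to being shut down" can look like, here is a minimal Python sketch. The `GracefulWorker` class and its method names are hypothetical and not taken from any framework; the only outside assumption is that the container platform sends SIGTERM before it stops a container, which is the usual behavior for Docker and Kubernetes.

```python
import signal
import threading


class GracefulWorker:
    """Hypothetical worker that stops taking new jobs once asked to shut down."""

    def __init__(self):
        self._stop = threading.Event()
        self.processed = []

    def handle_sigterm(self, signum, frame):
        # Called when the platform asks the container to stop.
        self._stop.set()

    def healthy(self):
        # A health check endpoint could report this value, so the platform
        # knows to stop routing traffic to a container that is draining.
        return not self._stop.is_set()

    def run(self, jobs):
        for job in jobs:
            if self._stop.is_set():
                break  # leave remaining jobs for another replica
            self.processed.append(job)
        return self.processed


def install(worker):
    # Register the handler; signal.signal must be called from the main thread.
    signal.signal(signal.SIGTERM, worker.handle_sigterm)
```

An application that cannot be interrupted this way, or that keeps state only in its own memory, is the kind that usually needs rework before it scales well in containers.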

If your company has a lot of web applications, all built and hosted on the same shared infrastructure, it may be more cost-effective to continue running them on a load-balanced or proxied pool of servers rather than on a Containerized Solution.

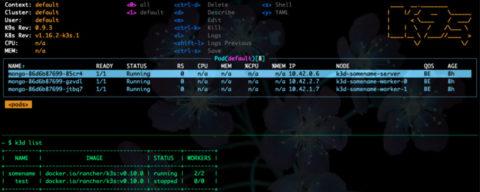

Case in point, let’s say you have a collection of 25 RESTful Web APIs, all written as Java Enterprise Applications, and they utilize shared user session and authentication mechanisms, as well as a shared Data Management application layer. All 25 APIs share a similar usage profile and resource profile. An AWS EC2 Auto Scaling group manages the cluster based on application utilization, scaling it between 3 and 5 servers of the same size. If you’ve already done the hard work of analyzing usage and resource profiles and sizing the cluster, and the usage is already well distributed among the 25 APIs, then whether the cluster is expanding or contracting with API usage, you will need a certain amount of memory and CPU. If you containerize the 25 APIs into 25 individual containers, whether you run them manually with Docker or on a cluster of servers with Docker Swarm, they will most likely still need the same amount of memory and CPU and run on roughly the same number of servers, which equates to the same cost. In fact, it will be more expensive for your organization once you factor in the cost to containerize all 25 APIs and rework how the shared services are made available to and used by your applications. Likewise, the cost goes up further on any Kubernetes architecture, which also needs to account for at least one additional server as a separate control plane.
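The arithmetic behind that argument can be sketched in a few lines of Python. Every figure below (CPU and memory per API, server size, monthly price) is a made-up assumption chosen only to land inside the 3 to 5 server range described above; substitute your own measurements.

```python
import math

# All figures are hypothetical, chosen only to illustrate the arithmetic.
API_COUNT = 25
CPU_PER_API = 0.5          # vCPUs each API needs at typical load (assumed)
MEM_PER_API_GB = 1.0       # memory each API needs (assumed)
SERVER_CPU = 4             # vCPUs per server (assumed)
SERVER_MEM_GB = 8          # memory per server (assumed)
SERVER_MONTHLY_COST = 100  # price per server per month (assumed)


def servers_needed(api_count):
    # The scarcer of the two resources decides how many servers are required.
    by_cpu = math.ceil(api_count * CPU_PER_API / SERVER_CPU)
    by_mem = math.ceil(api_count * MEM_PER_API_GB / SERVER_MEM_GB)
    return max(by_cpu, by_mem)


def monthly_cost(worker_servers, control_plane_servers=0):
    return (worker_servers + control_plane_servers) * SERVER_MONTHLY_COST


workers = servers_needed(API_COUNT)
shared_pool_cost = monthly_cost(workers)
kubernetes_cost = monthly_cost(workers, control_plane_servers=1)
```

Under these assumptions the workload needs the same 4 servers either way, so the only difference in the running cost is the extra control plane server, before counting any migration effort.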

For Most Customers…Containerize

However, if the usage profiles for those 25 APIs differ greatly, or there are larger or much more frequent fluctuations in usage between those applications, or if the usage profiles cannot be easily mapped to typical autoscaling metrics, then running those applications as separate containers, on Docker Swarm or Kubernetes, may in fact save you money. Kubernetes, for instance, allows far greater flexibility to distribute and scale individual applications across the entire cluster, including horizontal scaling of containers within the servers that make up the cluster, as well as horizontal scaling of the servers themselves.
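To see why uneven usage changes the picture, consider a deliberately simplified model, sketched in Python below. It assumes that every server in a shared pool runs one instance of every API, so the busiest API dictates the server count, while containers let each API scale on its own. The function names, load figures, and per-instance capacity are all hypothetical.

```python
import math


def replicas_needed(load, capacity_per_replica):
    # At least one instance stays up even when the load is tiny.
    return max(1, math.ceil(load / capacity_per_replica))


def pool_instances(loads, capacity_per_replica):
    # Shared pool: each server carries every API, so the busiest API
    # sets the number of servers, and every API is copied onto each one.
    servers = max(replicas_needed(l, capacity_per_replica) for l in loads)
    return servers * len(loads)


def container_instances(loads, capacity_per_replica):
    # Containers: each API gets only the replicas its own load requires.
    return sum(replicas_needed(l, capacity_per_replica) for l in loads)


even_loads = [300] * 25            # similar usage across all 25 APIs
uneven_loads = [100] * 24 + [500]  # one API is far busier than the rest
```

With a capacity of 100 requests per instance, the even case needs 75 instances under either approach, while the uneven case needs 125 instances in the shared pool but only 29 as containers. Real clusters are messier than this, but the gap is the source of the potential savings.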

Now, what if those 25 APIs are not all written in the same programming language or built on the same application architecture? Mixing and matching application architectures on the same server can be tricky, and the applications may even need to be split out onto separate servers. This could mean multiple clusters of servers, one for each programming language or application architecture, which is more expensive than a single cluster running one application architecture. However, if you can isolate the system resources and dependencies each application needs, and separate them from the underlying hardware, you can mix and match multiple application architectures and run them together on the same server. Bam, say hello to Containerized Solutions.

The Power is in Your Hands

Ultimately, you want an infrastructure and process for developing, building, and deploying your applications that is easy, fast, and efficient for your organization. Containerized Solutions are just one of the many tools at your disposal for doing that.

If you would like to talk more on this and similar topics and how they might work for your organization specifically, I would love to consult and help your organization succeed by building the right products even better.
