Introduction to Container Security

Containerization brings many benefits to an organization and its environments. Containers let developers test features much more rapidly and produce a product that can be deployed into a development or QA environment more easily than a traditional application installer. In general, containers are fairly simple to build and distribute to many different types of systems, and are easier to deploy with an orchestrator or platform. The benefits do come with some limitations, however, such as requiring Microsoft Windows hosts to build Windows containers or needing base containers of the correct target architecture (amd64, arm, etc.). For all their drawbacks, containers allow for rapid software, product, or tool implementation. What’s not to love?

As with all technologies, containers are not immune to cyber threats, and they must be managed a bit differently than non-ephemeral systems such as VMs, cloud-deployed instances, hyperconverged stacks, and standalone or clustered physical systems. Software and libraries are constantly evolving and being updated. That includes the application and its dependencies as well as any supporting software in the container. The impact is greater in larger base images than in smaller, more purpose-built ones.

The tradeoff is that with fewer built-in tools, a smaller image can be harder to build or debug. You may be left wondering, where is my bash? Smaller base images may therefore be reserved for specific use cases, such as a statically linked application (e.g., Go) or specialized container environments such as Distroless base images built specifically for running Java applications (e.g., google/distroless-java-11).

Containers can natively communicate over the network with other containers (in their namespace on an orchestration platform, or over the default bridge if just using Docker) without any hindrance, unless network policies and a Container Network Interface (CNI) plugin that enforces them are in place. Of course, these policies need to be maintained and updated over time, too. Containers are often deployed into clusters with mixed data sensitivities or into clusters with a mix of public/private resources, breaking the traditional network model of a separate network (i.e., a DMZ) for resources that can be reached from untrusted and public networks such as the internet. This isn’t necessarily a problem, as long as strict network policies and node taints are applied to enforce data boundaries, and none of the data is regulated data such as PHI, PII, CUI, unencrypted or reversibly encrypted credit card information (excluding tokenized card data), etc. As an aside, containers solved that whole updating-of-things problem, am I right?
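To make the network policy point concrete, here is a minimal sketch of a "default deny" Kubernetes NetworkPolicy, built as a Python dictionary and printed as JSON (which `kubectl apply -f` accepts alongside YAML). The policy name `default-deny-all` and the namespace `payments` are placeholders chosen for illustration, not something from a real environment, and the policy only has an effect if the cluster's CNI actually enforces network policies.

```python
import json


def default_deny_policy(namespace: str) -> dict:
    """Build a NetworkPolicy that selects every pod in `namespace` and allows no traffic."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        # The policy name is a placeholder for this sketch.
        "metadata": {"name": "default-deny-all", "namespace": namespace},
        "spec": {
            # An empty podSelector matches all pods in the namespace.
            "podSelector": {},
            # Listing both policy types with no ingress/egress rules
            # denies traffic in both directions for the selected pods.
            "policyTypes": ["Ingress", "Egress"],
        },
    }


if __name__ == "__main__":
    # "payments" is a hypothetical namespace name.
    print(json.dumps(default_deny_policy("payments"), indent=2))
```

Starting from a deny-all baseline and then adding narrowly scoped allow rules is one common way to keep the policies maintainable as the environment changes.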

Additionally, because of the perception of a security boundary for containers, all binaries (programs/applications/scripts/etc) are usually run as root inside of a container, which is problematic and something we will explore later in this article.

For containerized applications to use encryption, they need certificates, secrets, keys, and other types of credentials. Baking these into containers is a really bad practice. It is like putting the key under the doormat for anyone to find! Some of this can be dealt with by utilizing a service mesh and/or Kubernetes secrets, though the latter is not mature or very good at protecting sensitive information.

Service meshes bring more features and also more complexity. They can be used to secure communications from prying eyes using mutual TLS, but that also complicates some network troubleshooting techniques. Observability allows logs, monitoring data (performance and security), and other types of information to be aggregated and forwarded to platforms that visualize and parse this data to aid IT operations, developers, IT SecOps teams, and others. This is very important given that most applications run as root, often without appropriate data boundaries, and with secrets/keys/certs managed in a myriad of different ways. Containers can also end up exposed to untrusted networks, such as the internet, especially when nobody is thinking about data boundaries.

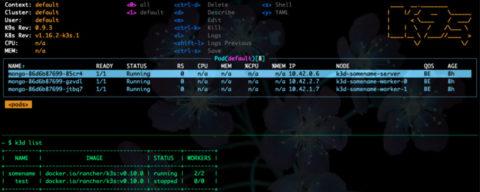

Container security is a complicated problem. There are many articles about what to do to help secure containerized environments. However, let’s dive a little deeper and discuss the top five things that break security in containerized environments.

Anti-Patterns

Not Configuring or Enforcing Data Boundaries and Pretending Containers are a Data Boundary

Containers have wrongly been seen as a security boundary. Deploying data-divergent workloads on the same cluster, with minimal intra-cluster communication or node restrictions, has not been considered a significant security issue. However, that assumption is false. At a basic level, containers share the host kernel and any vulnerabilities or weaknesses that exist in that host kernel. Furthermore, Docker or any other container runtime must be granted Linux capabilities (referred to in this article as SYSCAP privileges) from the Linux host. SYSCAP are administrative privileges that allow the processes that inherit them to go around, override, ignore, or otherwise control certain security restrictions and administrative functions on the host. By default, Docker grants 14 specific SYSCAP privileges, which allow processes to override protections and security controls in the Linux kernel such as Discretionary Access Control, for example by writing files anywhere on the filesystem regardless of ownership. Now, that doesn’t necessarily mean that the processes inside of the container get those privileges.
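One way to see which capabilities a process actually holds is to decode the `CapEff` bitmask that Linux exposes in `/proc/<pid>/status`. The sketch below does that in Python using the bit numbers from `<linux/capability.h>`. The mask `0xA80425FB` is the value commonly reported inside a default Docker container; treat it as an illustrative assumption, since defaults can differ between runtimes and versions.

```python
from typing import List

# Capability names indexed by bit number, as defined in <linux/capability.h>.
CAP_NAMES = [
    "CAP_CHOWN", "CAP_DAC_OVERRIDE", "CAP_DAC_READ_SEARCH", "CAP_FOWNER",
    "CAP_FSETID", "CAP_KILL", "CAP_SETGID", "CAP_SETUID",
    "CAP_SETPCAP", "CAP_LINUX_IMMUTABLE", "CAP_NET_BIND_SERVICE", "CAP_NET_BROADCAST",
    "CAP_NET_ADMIN", "CAP_NET_RAW", "CAP_IPC_LOCK", "CAP_IPC_OWNER",
    "CAP_SYS_MODULE", "CAP_SYS_RAWIO", "CAP_SYS_CHROOT", "CAP_SYS_PTRACE",
    "CAP_SYS_PACCT", "CAP_SYS_ADMIN", "CAP_SYS_BOOT", "CAP_SYS_NICE",
    "CAP_SYS_RESOURCE", "CAP_SYS_TIME", "CAP_SYS_TTY_CONFIG", "CAP_MKNOD",
    "CAP_LEASE", "CAP_AUDIT_WRITE", "CAP_AUDIT_CONTROL", "CAP_SETFCAP",
    "CAP_MAC_OVERRIDE", "CAP_MAC_ADMIN", "CAP_SYSLOG", "CAP_WAKE_ALARM",
    "CAP_BLOCK_SUSPEND", "CAP_AUDIT_READ", "CAP_PERFMON", "CAP_BPF",
    "CAP_CHECKPOINT_RESTORE",
]

# Assumption: mask typically seen in a default (non-privileged) Docker container.
DOCKER_DEFAULT_MASK = 0xA80425FB


def decode_caps(mask: int) -> List[str]:
    """Return the names of all capabilities whose bit is set in `mask`."""
    return [name for bit, name in enumerate(CAP_NAMES) if mask & (1 << bit)]


def effective_caps(status_path: str = "/proc/self/status") -> List[str]:
    """Read the CapEff line for a process and decode it (Linux only)."""
    with open(status_path) as f:
        for line in f:
            if line.startswith("CapEff:"):
                return decode_caps(int(line.split()[1], 16))
    return []


if __name__ == "__main__":
    for cap in decode_caps(DOCKER_DEFAULT_MASK):
        print(cap)
```

Running `effective_caps()` inside a container is a quick check of whether a workload holds more than it needs before deciding what to drop.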

With an insecure configuration, or when paired with one of the many vulnerabilities in Docker and the Linux kernel, those SYSCAP privileges have allowed what is called “container escape”. This is when a process in the container can begin to affect and alter the host in some fashion. Cybereason has published a very in-depth explanation of how containers can be exploited through system capabilities. When performing penetration testing for our clients, we look for such misconfigurations in containerized workloads. Oteemo has successfully exploited containers to get host root access during penetration tests. Running containers as “privileged” means they get ALL system capabilities, which makes it easier for an attacker to gain access to the host or even pop a root shell.
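As a rough illustration of the kind of misconfiguration check described above, the following sketch inspects one entry of `docker inspect` JSON output and flags privileged mode, added capabilities, and a root user. It relies on the `HostConfig.Privileged`, `HostConfig.CapAdd`, and `Config.User` fields of that output; the function name and the finding messages are invented for this example, and it is a starting point rather than a complete audit.

```python
import json
from typing import List


def audit_container(inspect_entry: dict) -> List[str]:
    """Return human-readable findings for one `docker inspect` entry."""
    findings = []
    host_config = inspect_entry.get("HostConfig") or {}
    config = inspect_entry.get("Config") or {}

    if host_config.get("Privileged"):
        findings.append("runs privileged (all capabilities granted)")

    # CapAdd is null in the JSON when nothing was added.
    for cap in host_config.get("CapAdd") or []:
        findings.append(f"adds capability {cap}")

    # An empty User normally means the process runs as root (UID 0).
    user = (config.get("User") or "").split(":")[0]
    if user in ("", "0", "root"):
        findings.append("runs as root inside the container")

    return findings


if __name__ == "__main__":
    # Hand-written sample data, not output from a real container.
    sample = json.loads(
        '{"HostConfig": {"Privileged": true, "CapAdd": ["SYS_ADMIN"]},'
        ' "Config": {"User": ""}}'
    )
    for finding in audit_container(sample):
        print(finding)
```

In practice the input would come from something like `docker inspect <container>`, which prints a JSON array with one entry per container.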

This does not sound like a security boundary!

Using Too Many “Security” Tools

Purpose-built container security tools are very helpful, whereas traditional vulnerability scanners are not very useful for assessing containers and container orchestrators. There are many great vendors out there, and Oteemo has used most of the tools that exist. Generally, these tools require greatly enhanced privileges compared with other types of containers (e.g., web applications, database containers, etc.). The problem isn’t that security tools are “bad” or not worth using. The problem is that these tools need so many additional privileges on the host(s) and cluster that they introduce additional surface area for a threat actor. Security tools aren’t free of security problems either! Every tool that requires enhanced privileges on the cluster, containers, and hosts expands the attack surface, and every container running as part of that tool or platform can bring new vulnerabilities into the cluster. The benefit a tool brings needs to be greater than the security risk created by installing it, and for the most part that is the case. Security tools are often very useful, and using one or maybe two is a good trade-off, especially when you can mitigate some of the risks.

However, using more than one or two is really just opening up the cluster, hosts, and containers to additional risk for very little, if any, benefit. This is not a “throw the kitchen sink at it” deal. Strategic use of security tools in a cluster is important. Remove any tools that don’t have real impact, that duplicate functionality without adding critical functions you absolutely need, or that simply don’t impress you. A lot of companies buy tools, implement them, and then walk away without much further thought. This IS a security risk, not a mitigation of other risks!

Utilizing Service Mesh Without Observability & Monitoring

Service meshes such as Istio are excellent tools. We can use these service meshes to encrypt all of the traffic inside of the cluster AND we get mutual TLS. This ensures each service authenticates to any other service it tries to connect to and exchange data with. In other words, both the “client” and the “server” authenticate via certificates. As a result, we can worry less about critical data being eavesdropped on as it flows through the cluster.
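To illustrate what "both sides authenticate via certificates" means at the TLS layer, here is a minimal sketch using Python's standard `ssl` module. It is not how Istio is configured (the mesh's sidecar proxies handle this on the application's behalf); it only shows the server-side setting that turns ordinary TLS into mutual TLS. The file paths passed to `mtls_server_context` are hypothetical.

```python
import ssl


def require_client_certs(ctx: ssl.SSLContext) -> ssl.SSLContext:
    """Make the server reject any client that does not present a valid certificate."""
    ctx.verify_mode = ssl.CERT_REQUIRED
    return ctx


def mtls_server_context(cert_file: str, key_file: str, ca_file: str) -> ssl.SSLContext:
    """Build a server-side context that presents its own cert and demands one from clients."""
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    # The server's own identity (hypothetical paths supplied by the caller).
    ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    # The CA that client certificates must chain to.
    ctx.load_verify_locations(cafile=ca_file)
    return require_client_certs(ctx)
```

With ordinary TLS only the server proves its identity; setting `verify_mode` to `ssl.CERT_REQUIRED` on the server side is what forces the client to prove its identity as well.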

With a service mesh in place, anything that CNI network policies have not blocked from communicating still shouldn’t be able to decrypt or read any of the data on the network, or authenticate to other services and containers, even where the network policies alone would (incorrectly) allow such eavesdropping. This is a wonderful addition to every Kubernetes cluster. Unfortunately, there’s a catch:

Since all of the traffic is encrypted, that also means we cannot see any malicious or suspicious traffic inside the cluster without security and monitoring tools!

Uh oh. Those security tools we installed to give us observability might no longer be able to function as they were intended! Sometimes these security tools can be configured to give us that observability, and other times that’s not as feasible. It is very important to set up observability and logging from each container (which also requires additional privileges in the container/host, as per the previous section) and to forward what is happening inside the cluster to an aggregator such as Grafana Loki or the ELK stack.

Similarly, we need a container or tool that can capture the network traffic (like NetFlow) so we can visualize and inspect the traffic in the cluster. This container or tool will need the root certificate(s) that are the base of all of the certificates used for mTLS in the cluster AND the Linux capability CAP_NET_RAW, which lets it capture and forge traffic not intended for that container. Ah, the surface area expands!

Without that visibility, we have completely unmonitored traffic inside the cluster network, which is perfect for attackers who hijack legitimate containers or get malicious containers deployed in your cluster: they can exfiltrate data, install cryptocurrency miners, and use those containers as part of a botnet. Just like on the physical network, we should be doing packet sniffing, TLS decryption, and packet inspection when we use a service mesh inside of a Kubernetes cluster. The same networking concepts apply: we need to ensure not only that we are not unwitting victims, but also that we are not assisting an attacker in creating additional malicious traffic in the cluster and container network. An attacker could use your own containers to DDoS another system in the cluster, other systems outside of your cluster on your network, or an external target.
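On the logging side, one low-privilege approach is for each application to write structured logs to standard output and let the platform's log collector ship them to the aggregator. The sketch below is one way to do that with Python's standard `logging` module; the field names in the JSON record and the logger name `orders-api` are arbitrary choices for this example, not a format required by Loki or ELK.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        return json.dumps(entry)


def build_logger(name: str, stream=sys.stdout) -> logging.Logger:
    """Create a logger that writes JSON lines to `stream` (stdout by default)."""
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(name)
    logger.handlers = [handler]  # replace any handlers from earlier calls
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


if __name__ == "__main__":
    # "orders-api" is a hypothetical service name.
    log = build_logger("orders-api")
    log.warning("authentication failed for user %s", "alice")
```

One JSON object per line keeps the records easy to parse and query once they reach the aggregator.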

Using “Small” Images Without Good Supply Chain Management

This is not to pick on or support any specific container ecosystem. Using “smaller” container images, or ones that have “fewer vulnerabilities,” is not a “better” solution if they lack mature supply chain management. Scanning tools don’t support every ecosystem, and this goes for both SBOM tools and vulnerability scanners. Make sure you are using a container image that is fully supported, and don’t necessarily trust vendor claims. Do your own research and dig into the results to determine whether they match what the vendor states and whether the container gives you what you expect and need. Additionally, vulnerabilities aren’t the only threat. Malware has been found in upstream containers multiple times. There are multiple vectors for this:

- The container registry gets breached and containers are modified by an unauthorized party.

- The developers’ system or source code repository is breached and malicious code or programs are added.

- An upstream dependency is malware (npm and PyPI have hosted malware-laced packages) or is re-packaged and built to contain malicious code or malware.

- Threat actors intentionally upload a malicious container.

- Use your imagination, threat actors are creative!

According to the security vendor Sysdig, there are over 1,650 malicious containers hosted on Docker Hub! Yikes. Palo Alto’s Unit 42 found something similar, specifically identifying 30 containers that had cryptocurrency miners, unbeknownst to those who downloaded those containers. It’s great to get a “clean” vulnerability scan, but the problem of container security and a mature ecosystem is far bigger than just the vulnerability scan results. Make sure you are doing malware scans and analyzing whether a “small” image fits your organization’s security posture, based on who authors the containers, where they are authored, and whether or not they have mature software supply chain management.
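One small, related control (an addition for illustration, not something the vendors above prescribe) is to reference images by content digest rather than by a mutable tag, so that an image swapped out under the same tag in a breached registry no longer matches. The sketch below simply checks whether an image reference is pinned with a `sha256` digest; the registry and image names in the demo are made up.

```python
import re

# An image reference pinned by digest ends with "@sha256:" plus 64 hex characters.
_DIGEST_RE = re.compile(r"@sha256:[0-9a-f]{64}$")


def is_pinned_by_digest(image_ref: str) -> bool:
    """Return True if `image_ref` identifies an image by sha256 digest."""
    return bool(_DIGEST_RE.search(image_ref))


if __name__ == "__main__":
    # Hypothetical image references for demonstration only.
    refs = [
        "registry.example.com/team/app:latest",
        "registry.example.com/team/app@sha256:" + "0" * 64,
    ]
    for ref in refs:
        status = "pinned" if is_pinned_by_digest(ref) else "NOT pinned"
        print(f"{status}: {ref}")
```

Digest pinning does not tell you whether an image was trustworthy in the first place, so it complements malware and vulnerability scanning rather than replacing it.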

Baking Secrets Into Containers

Obviously, we all want a site to be ‘https’ (secured with TLS) instead of ‘http’ (unencrypted) and have authentication for the APIs a containerized piece of software is exchanging data with. However, baking these API keys, certificates and other secrets directly into the software container is a really bad idea! It is pretty easy to inspect containers and also copy files into and out of them when they are running (or as a disk image using certain docker or podman commands).

Secrets should remain just that: secret. When a container is uploaded to a registry, all of the files in it can be accessed or inspected by anyone who downloads the container. That means the secrets stored in the container can simply be taken out. Secrets should be stored in tools that are made for this purpose, and storing them as “secrets objects” in Kubernetes is not secure by default either. Storing secrets in etcd without encryption is even worse.

Researchers in Germany recently discovered that tens of thousands of publicly available containers are leaking authentication credentials, API keys, certificates, and other types of secrets. According to these German researchers, that’s almost 10% of all containers hosted in public registries! Think about all of the SaaS providers your organization may use. Do you know if they use containers that are leaking secrets that could lead to your data being stolen or compromised? How about your internal environment? Do you provide such services? If client data is stolen, there can be massive liability.
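To show how little effort it takes to find baked-in secrets, here is a minimal sketch that walks a directory (for example, an image filesystem that has been extracted to disk) and flags files containing a PEM private key header or something shaped like an AWS access key ID. The two patterns are illustrative assumptions and far from exhaustive; dedicated secret scanners cover many more formats.

```python
import os
import re
import tempfile
from typing import List, Tuple

# Illustrative patterns only; real scanners ship many more.
PATTERNS = {
    "private key": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


def scan_tree(root: str) -> List[Tuple[str, str]]:
    """Return (path, pattern label) pairs for files under `root` that match a pattern."""
    hits = []
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            path = os.path.join(dirpath, name)
            try:
                # Decode leniently so binary files do not raise errors.
                with open(path, "r", errors="ignore") as f:
                    text = f.read()
            except OSError:
                continue  # unreadable file; skip it
            for label, pattern in PATTERNS.items():
                if pattern.search(text):
                    hits.append((path, label))
    return hits


if __name__ == "__main__":
    # Demo on a throwaway directory; in practice, point scan_tree at an
    # extracted image filesystem.
    with tempfile.TemporaryDirectory() as root:
        with open(os.path.join(root, "server.key"), "w") as f:
            f.write("-----BEGIN PRIVATE KEY-----\n")
        for path, label in scan_tree(root):
            print(f"{label}: {path}")
```

If a check like this finds anything in an image you publish, the secret should be treated as compromised and rotated, not just removed from the next build.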

I hope this gave you a good overview of some of the practices we have seen that can actually hamper the security of a containerized environment, even while internal teams think they are “doing all the right things”. I can’t provide a prescriptive solution for all of these anti-patterns. The goal of this article is to help you understand the consequences, both positive and negative, of the choices you make when managing containerized workloads. You need to perform a risk and benefit analysis of all of your practices and decide which tools (and how many) are appropriate for your situation. Oteemo’s general guidance is:

- Run containers as non-root users inside the container.

- Run Docker (or any container runtime) as a non-root user on the host.

- Run Docker (or any other container runtime) without SYSCAP privileges if possible. Add specific privileges only when absolutely necessary.

- Use a service mesh and node taints to isolate containers requiring root access and/or SYSCAP privileges to specific, specialized “privileged” nodes where possible. This does not imply trust, just limiting attack surfaces and isolating damage.

- Patch the host kernel frequently (control plane and worker nodes).

- Separate data by tainting nodes and enforcing data boundaries.

- Use a service mesh to enforce mTLS but make sure to enable observability and monitoring so YOU can decrypt and inspect the traffic.

- Balance using “small” images with good supply chain management. Amazon Linux 2 containers have become my personal favorite: reasonably sized, updated, and very clean in vulnerability scans. It maintains binary compatibility with RHEL and CentOS. For Debian-based, purpose-specific containers, consider Google Distroless.

- Choose your security tools carefully. Unless you need a specific function from a new tool that covers a compliance or serious capability gap, try to limit runtime security tools to one or at most two.

- Scan containers for vulnerabilities and malware prior to uploading to an internal registry or deploying. Re-scan containers while they are running in the cluster regularly. Amazon Inspector, if in AWS, is a really useful cloud based service to scan containers in ECR.

- Run containers, and all systems and services, internally, with exceptions only for content you are intentionally serving publicly. A new campaign has been launched which brings this to a head. A threat actor, TeamTNT, is attacking publicly exposed services to steal credentials, deploy malware, install cryptominers, etc. https://www.darkreading.com/cloud/aws-cloud-credential-stealing-campaign-spreads-azure-google

- Enable observability and logging for all workloads in the cluster including system logs.

- Use containers that have updated applications and libraries. Rebase if necessary to upgrade components or remove things like root, or use a trusted partner, like Oteemo! We can rebase and host upstream or internally developed containers.

- Use a CNI that enforces network policies AND configure them appropriately for your environment.
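Several of the items above (non-root users, no privileged mode, minimal capabilities) can be checked mechanically before a workload is deployed. The following is a minimal sketch, assuming the pod manifest has already been loaded into a Python dictionary; it reads the standard Kubernetes `securityContext` fields, while the function name and finding messages are invented for this example. It does not replace an admission controller or a policy engine.

```python
from typing import List


def audit_pod_spec(pod: dict) -> List[str]:
    """Return findings for containers in a pod manifest that ignore the guidance above."""
    findings = []
    spec = pod.get("spec") or {}
    pod_sc = spec.get("securityContext") or {}

    for container in spec.get("containers") or []:
        name = container.get("name", "<unnamed>")
        sc = container.get("securityContext") or {}

        # A container-level setting overrides the pod-level one.
        non_root = sc.get("runAsNonRoot", pod_sc.get("runAsNonRoot", False))
        if not non_root:
            findings.append(f"{name}: runAsNonRoot is not set")

        if sc.get("privileged"):
            findings.append(f"{name}: privileged container")

        # Treated as allowed unless explicitly set to false.
        if sc.get("allowPrivilegeEscalation", True):
            findings.append(f"{name}: allowPrivilegeEscalation is not disabled")

        caps = sc.get("capabilities") or {}
        if "ALL" not in (caps.get("drop") or []):
            findings.append(f"{name}: does not drop ALL capabilities")
        for cap in caps.get("add") or []:
            findings.append(f"{name}: adds capability {cap}")

    return findings


if __name__ == "__main__":
    # Hand-written example manifest; the names are hypothetical.
    pod = {
        "spec": {
            "containers": [
                {"name": "web", "securityContext": {"privileged": True}},
            ]
        }
    }
    for finding in audit_pod_spec(pod):
        print(finding)
```

A check like this fits naturally into a CI pipeline, so that exceptions to the guidance are deliberate and reviewed rather than accidental.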
